HHO is a population-based, gradient-free optimization algorithm. The HHO algorithm was published in 2019 in Future Generation Computer Systems (FGCS). The main inspiration of HHO is the cooperative behavior and chasing style of Harris' hawks in nature, a hunting strategy called the "surprise pounce".

Team Members:

Background Facts

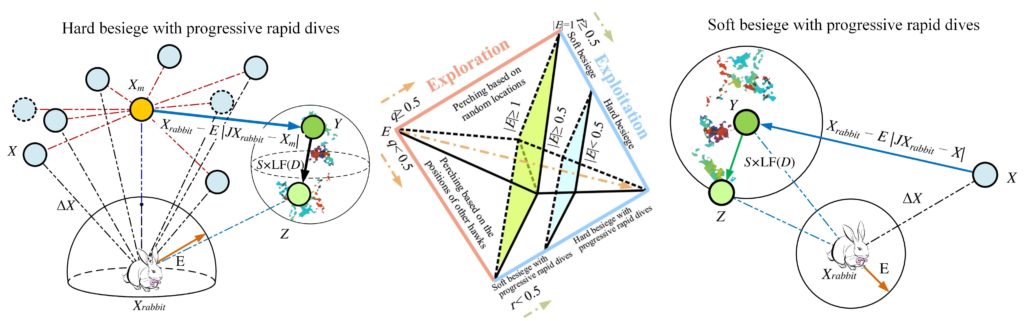

Harris' hawks can exhibit a variety of chasing patterns, depending on the dynamic nature of the scenario and the escaping patterns of the rabbit.

Mathematical model and structure

The following features can theoretically help us understand why the proposed HHO can be beneficial in exploring or exploiting the search space of a given optimization problem:

- The escaping energy parameter has a dynamic, randomized, time-varying nature, which can further boost the exploration and exploitation patterns of HHO. This factor also helps HHO make a smooth transition between exploration and exploitation.

- Different diversification mechanisms with regard to the average location of hawks can boost the exploratory behavior of HHO in initial iterations.

- Different Lévy flight (LF)-based patterns with short-length jumps enhance the exploitative behavior of HHO when conducting a local search.

- The progressive selection scheme lets search agents improve their positions step by step and accept a new position only if it is better, which can improve the quality of solutions and the intensification power of HHO over the course of iterations.

- HHO utilizes a series of searching strategies based on the E and r parameters and then selects the best movement step. This capability also has a constructive impact on the exploitation potential of HHO.

- The randomized jump strength J can help candidate solutions balance their exploration and exploitation tendencies.

- The use of adaptive and time-varying parameters allows HHO to handle difficulties of a search space, including locally optimal solutions, multi-modality, and deceptive optima.
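The time-varying escaping energy and the phase switch it drives can be sketched in a few lines. This is a minimal illustration, assuming the formulas from the HHO paper: E = 2·E0·(1 − t/T) with E0 drawn uniformly from [−1, 1], the jump strength J = 2(1 − r5), and the usual rule that |E| ≥ 1 triggers exploration while |E| < 1 together with the escape chance r selects one of the four besiege strategies. The function names are hypothetical and not taken from the published MATLAB code.

```python
import random


def escaping_energy(t, T, rng=random):
    """Escaping energy E = 2 * E0 * (1 - t/T), with E0 drawn uniformly from [-1, 1)."""
    E0 = 2 * rng.random() - 1      # initial energy of the rabbit
    return 2 * E0 * (1 - t / T)    # the bound on |E| shrinks linearly to 0 as t -> T


def jump_strength(rng=random):
    """Random jump strength J = 2 * (1 - r5), with r5 drawn from [0, 1)."""
    return 2 * (1 - rng.random())


def select_phase(E, r):
    """Map |E| and the escape chance r to the HHO movement strategy used for a hawk."""
    if abs(E) >= 1:
        return "exploration"
    if r >= 0.5 and abs(E) >= 0.5:
        return "soft besiege"
    if r >= 0.5:
        return "hard besiege"
    if abs(E) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"


if __name__ == "__main__":
    rng = random.Random(0)
    T = 10
    for t in range(T):
        E = escaping_energy(t, T, rng)
        r = rng.random()           # chance of a successful escape
        print(t, round(E, 3), select_phase(E, r))
```

Because the bound on |E| falls below 1 after the first half of the run, the second half of the iterations uses only the besiege (exploitation) strategies.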

Source code of the HHO algorithm

- MATLAB source code of HHO is publicly available here

- LaTeX code of the HHO section, including the pseudocode, is publicly available here

- Visio files of the figures in the HHO section are publicly available here

We are always happy to collaborate with you if you have any new ideas or proposals regarding the HHO algorithm. You can contact us or the first author, Dr. Ali Asghar Heidari. Let's enjoy finding optimal solutions to your real-world problems.

Thanks

You're welcome, my friend.

(Please ignore my previous comment; some of the text was deleted because I used the less-than and greater-than symbols.)

First of all, thank you for sharing this paper and source code. It is an excellent algorithm!

Secondly, pardon me if I'm wrong, but I've found a discrepancy in how the perching strategies (Eq. 1) are applied. In the paper, the formula with the rabbit location (Xrabbit) is used for perching based on other family members (q less than 0.5), but in the MATLAB code, it is used for perching on a random tall tree (q greater than or equal to 0.5).

Please kindly respond, as it would help me with my research. Thank you.

Hi, thanks for your interest. Depending on q, in one case the hawk perches based on the other family members, and in the other case it perches on a random tall tree.
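The two branches of Eq. (1) discussed in this exchange can be sketched as follows. This sketch assigns the branches as the paper's text describes them (q < 0.5: perch using the rabbit and the mean position X_m of the family members; q ≥ 0.5: perch on a random tall tree, modeled as a move relative to a randomly chosen hawk X_rand). Since q is drawn uniformly, each strategy is chosen with probability 0.5 under either branch assignment, so swapping which label goes with which condition does not change how often each update rule is used. The function name `perch` and the final clipping step are assumptions added for illustration, not part of Eq. (1).

```python
import random


def perch(X, i, X_rabbit, lb, ub, rng=random):
    """One exploration move for hawk i, following Eq. (1) as described in the HHO paper.

    X:        list of hawk positions (each a list of floats)
    X_rabbit: best position found so far
    lb, ub:   per-dimension lower and upper bounds
    """
    dim = len(X_rabbit)
    q, r1, r2, r3, r4 = (rng.random() for _ in range(5))
    if q >= 0.5:
        # Perch on a random tall tree: move relative to a randomly selected hawk.
        X_rand = X[rng.randrange(len(X))]
        new = [X_rand[d] - r1 * abs(X_rand[d] - 2 * r2 * X[i][d]) for d in range(dim)]
    else:
        # Perch based on the rabbit and the other family members (their mean X_m).
        X_m = [sum(h[d] for h in X) / len(X) for d in range(dim)]
        new = [(X_rabbit[d] - X_m[d]) - r3 * (lb[d] + r4 * (ub[d] - lb[d]))
               for d in range(dim)]
    # Clip back into the search range (a common safeguard, not part of Eq. (1)).
    return [min(max(v, lb[d]), ub[d]) for d, v in enumerate(new)]


if __name__ == "__main__":
    hawks = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(perch(hawks, 1, [0.0, 0.0], [-10.0, -10.0], [10.0, 10.0], random.Random(42)))
```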

Hello Ali,

It is a very interesting and effective algorithm that requires logic, skill, and hard work to understand. But in the end, it will pay off well in new publications.

Thanks for sharing the code and research paper.

Great work with a lot of effort!!!

Thank you so much for your feedback. I think you will find excellent results with HHO. All the best to you, Dr. Devdutt.

Thank you very much for sharing the paper “Harris hawks optimization: Algorithm and applications” in Future Generation Computer Systems. It is an excellent optimization algorithm, and I am very interested in it. Thank you also for sharing the code for the paper. From “http://www.alimirjalili.com/HHO.html and http://www.evo-ml.com/2019/03/02/hho”, I obtained the code for some of the test functions (F1-F23) in this paper, but I could not find the code for F24-F29 or the code for solving the engineering benchmark problems. Could you share them with me?

Thank you for your understanding and kind assistance. I look forward to your reply at your earliest convenience.

Thank you so much, Xia Li; we are happy to see your interest in and attention to our HHO algorithm. Currently, the code for F24-F29 and the constrained versions of HHO in different languages are not publicly available, and we will release them as soon as possible. In the meantime, you can compare your results on the constrained cases with the results reported in the paper. You can use the well-known, publicly documented penalty method to handle constraints. For F24-F29, you can find the functions on the websites of the CEC competitions.

I hope you reach favorable results with HHO.
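The penalty approach mentioned in this reply can be sketched as a wrapper that turns a constrained problem into an unconstrained objective HHO can minimize directly. This is one common variant, a static quadratic penalty for inequality constraints written as g(x) ≤ 0; the function name `penalized`, the penalty factor `rho = 1e6`, and the toy problem are assumptions chosen for illustration, not the setup used in the paper.

```python
def penalized(fobj, constraints, rho=1e6):
    """Return fobj plus a static quadratic penalty for constraints of the form g(x) <= 0.

    constraints: list of callables g; any positive g(x) counts as a violation
    rho:         penalty factor (an assumed value; tune it to the problem's scale)
    """
    def f(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return fobj(x) + rho * violation
    return f


# Hypothetical example: minimize x0^2 + x1^2 subject to x0 + x1 >= 1, i.e. 1 - x0 - x1 <= 0.
objective = penalized(lambda x: x[0] ** 2 + x[1] ** 2, [lambda x: 1 - x[0] - x[1]])
print(objective([0.5, 0.5]))  # feasible, no penalty: 0.5
print(objective([0.0, 0.0]))  # infeasible: 0 + 1e6 * 1 = 1000000.0
```

Equality constraints h(x) = 0 are often handled the same way by rewriting them as |h(x)| − ε ≤ 0 for a small tolerance ε.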

Hello Ali,

I work on the WOA algorithm. Can you send information about exploration and exploitation in this algorithm and where this method is used?

Thank you

You can refer to the if-then rules in the code; those branches, together with the initial iterations, are the ones used for global search.

Is it possible to use this optimization method for power system problems?

Yes, sure. You can apply this method to any problem if you develop your objective function and pass it as an input to HHO.
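To show what "passing the objective function as an input" looks like end to end, here is a compact pure-Python sketch of the HHO loop that accepts any objective `fobj` together with bounds `lb`, `ub` and dimension `dim`. It is written for this page from the paper's description and is not the authors' MATLAB code: the function names, boundary clipping, the greedy acceptance of dives, and the Mantegna-style Lévy step with β = 1.5 and a 0.01 scale are assumptions. A power system problem would plug in by replacing the Sphere example with its own cost function (constraints, if any, added as penalties).

```python
import math
import random


def levy(dim, rng, beta=1.5):
    """Levy-flight step via Mantegna's algorithm, scaled by 0.01."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return [0.01 * rng.gauss(0, 1) * sigma / abs(rng.gauss(0, 1)) ** (1 / beta)
            for _ in range(dim)]


def hho(fobj, lb, ub, dim, N=30, T=500, seed=None):
    """Minimize fobj over the box [lb, ub] with N hawks for T iterations."""
    rng = random.Random(seed)

    def clip(x):  # keep positions inside the search range
        return [min(max(v, lb[d]), ub[d]) for d, v in enumerate(x)]

    X = [[lb[d] + rng.random() * (ub[d] - lb[d]) for d in range(dim)] for _ in range(N)]
    rabbit, rabbit_fit = None, math.inf
    for t in range(T):
        for i in range(N):  # evaluate hawks; the best position so far is the "rabbit"
            X[i] = clip(X[i])
            fit = fobj(X[i])
            if fit < rabbit_fit:
                rabbit, rabbit_fit = X[i][:], fit
        X_m = [sum(h[d] for h in X) / N for d in range(dim)]  # mean hawk position
        for i in range(N):
            E = 2 * (2 * rng.random() - 1) * (1 - t / T)  # escaping energy
            if abs(E) >= 1:  # exploration (Eq. 1)
                r1, r2, r3, r4 = (rng.random() for _ in range(4))
                if rng.random() >= 0.5:  # perch relative to a random hawk
                    Xr = X[rng.randrange(N)]
                    X[i] = [Xr[d] - r1 * abs(Xr[d] - 2 * r2 * X[i][d]) for d in range(dim)]
                else:  # perch using the rabbit and the mean position
                    X[i] = [(rabbit[d] - X_m[d]) - r3 * (lb[d] + r4 * (ub[d] - lb[d]))
                            for d in range(dim)]
                continue
            r = rng.random()            # chance of a successful escape
            J = 2 * (1 - rng.random())  # random jump strength
            if r >= 0.5 and abs(E) >= 0.5:  # soft besiege
                X[i] = [(rabbit[d] - X[i][d]) - E * abs(J * rabbit[d] - X[i][d])
                        for d in range(dim)]
            elif r >= 0.5:  # hard besiege
                X[i] = [rabbit[d] - E * abs(rabbit[d] - X[i][d]) for d in range(dim)]
            else:  # progressive rapid dives: soft uses X[i], hard uses X_m
                ref = X[i] if abs(E) >= 0.5 else X_m
                Y = clip([rabbit[d] - E * abs(J * rabbit[d] - ref[d]) for d in range(dim)])
                step = levy(dim, rng)
                Z = clip([Y[d] + rng.random() * step[d] for d in range(dim)])
                f_x = fobj(X[i])
                if fobj(Y) < f_x:  # keep a dive only if it improves the hawk
                    X[i] = Y
                elif fobj(Z) < f_x:
                    X[i] = Z
    return rabbit, rabbit_fit


def sphere(x):
    """F1 (Sphere), used here only as an example objective."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    best, best_fit = hho(sphere, lb=[-100] * 5, ub=[100] * 5, dim=5, N=30, T=300, seed=1)
    print(best_fit)
```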

Thanks for your nice feedback, we appreciate it

Excellent optimization algorithm, thank you for sharing the code and the paper.

Thanks for your interest.

Excellent EA. Congratulations to Ali for writing such a great EA. Good luck!

Thank you so much. We appreciate your feedback and interest in our work.

In the equations, it should be made clear which quantities are vectors and which are scalars. An example iteration, if provided, would be highly helpful.

Sure, thanks for your constructive suggestion.
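As a small illustration of the point raised in this exchange, here is one hand-checkable update of a single hawk in two dimensions, using the soft and hard besiege rules as given in the HHO paper. X and X_rabbit are vectors (one entry per dimension), while E and J are scalars shared by all dimensions. The numbers are made up and chosen to be exact in binary floating point; with r ≥ 0.5, E = 0.75 falls in the soft besiege case and E = 0.25 in the hard besiege case. The helper names are hypothetical.

```python
def soft_besiege(X, X_rabbit, E, J):
    """Soft besiege: X(t+1) = (X_rabbit - X) - E * |J * X_rabbit - X|.

    X and X_rabbit are vectors (lists); E and J are scalars.
    """
    return [(xr - x) - E * abs(J * xr - x) for x, xr in zip(X, X_rabbit)]


def hard_besiege(X, X_rabbit, E):
    """Hard besiege: X(t+1) = X_rabbit - E * |X_rabbit - X|, with scalar E."""
    return [xr - E * abs(xr - x) for x, xr in zip(X, X_rabbit)]


X = [2.0, -1.0]        # current hawk position (vector)
X_rabbit = [1.0, 0.5]  # best position found so far (vector)
print(soft_besiege(X, X_rabbit, E=0.75, J=1.5))  # [-1.375, 0.1875]
print(hard_besiege(X, X_rabbit, E=0.25))         # [0.75, 0.125]
```

Working the first dimension of the soft besiege by hand: (1.0 − 2.0) − 0.75 · |1.5 · 1.0 − 2.0| = −1.0 − 0.375 = −1.375.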

First, the content style is pleasing: the text, pictures, videos, and embedded PDF papers are clear, neat, and beautiful.

Secondly, the content is concise and clear.

Finally, the best thing is that the author gives us free downloads of all the resources involved in the paper.

A conscientious piece of work; praise to the author, Ali Asghar Heidari!

Give the thumbs-up!

Thank you so much for your interest.